🍔🧠 Netflix's 3-Tier Cache System That Powers 700M Hours Daily

PLUS: Caching Explained in 5 Minutes 🗝️, AI Agents Starter Kit 🧰, Time Management for Engineers ⏳

Happy Monday! ☀️

Welcome to the 534 new hungry minds who have joined us since last Monday!

If you aren't subscribed yet, join smart, curious, and hungry folks by subscribing here.

📚 Software Engineering Articles

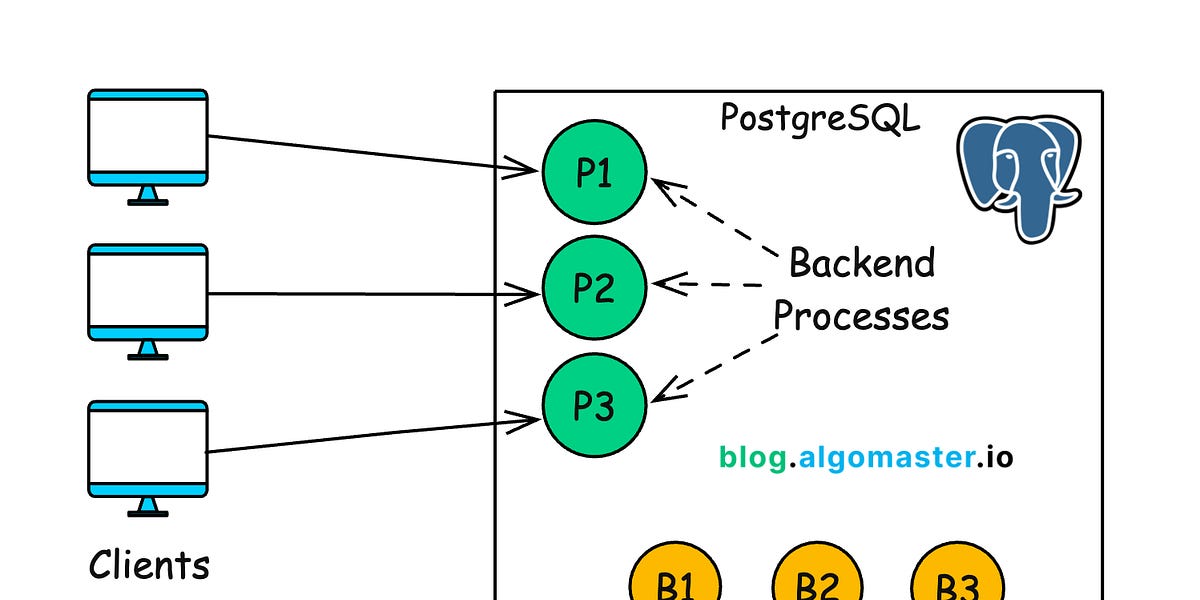

Master PostgreSQL with this internal architecture deep dive

Netflix reveals how they accurately track eBPF flows

Microsoft releases beginner-friendly AI agents guide

Learn every caching strategy in just 5 minutes

Discover traits of exceptional programmers from real experiences

🗞️ Tech and AI Trends

Meta unveils Llama 4, pushing multimodal AI boundaries

ChatGPT now has persistent memory across all conversations

Samsung's AI robot Ballie arrives with Gemini integration

👨🏻💻 Coding Tip

Use Docker's --mount instead of -v for clearer volume management

Time-to-digest: 5 minutes

Big thanks to our partners for keeping this newsletter free.

If you have a second, clicking the ad below helps us a ton—and who knows, you might find something you love.

💚

The #1 AI Meeting Assistant

Still taking manual meeting notes in 2025? Let AI handle the tedious work so you can focus on the important stuff.

Fellow is the AI meeting assistant that:

✔️ Auto-joins your Zoom, Google Meet, and Teams calls to take notes for you.

✔️ Tracks action items and decisions so nothing falls through the cracks.

✔️ Answers questions about meetings and searches through your transcripts, like ChatGPT.

Try Fellow today and get unlimited AI meeting notes for 30 days.

Netflix powers 15% of global internet traffic and streams 94 billion hours of content. Its distributed architecture, built on cloud services and a custom content delivery network, delivers video to millions of concurrent viewers across 190 countries with minimal startup delay and buffering.

The challenge: Building a globally distributed system that maintains sub-second latency and high reliability while streaming HD video to millions of concurrent users, handling 15% of global internet traffic.

Implementation highlights:

Two-plane architecture: Control plane on AWS for browsing/recommendations, data plane (Open Connect) for video delivery

Custom CDN network: Purpose-built Open Connect servers placed directly within ISP networks

Microservices at scale: 700+ services enabling independent scaling and deployment

Chaos engineering: Tools like Chaos Monkey randomly break things to ensure resilience

Sophisticated data pipeline: Real-time analytics processing millions of events/second
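The two-plane split and the ISP-embedded servers can be sketched as a toy model in Python. This is not Netflix's code. `CacheNode`, `steer`, and `fetch` are hypothetical names. The three tiers are also an assumption made for illustration: a server inside the viewer's ISP, one at an internet exchange, and an origin. In this model the control plane only decides where the video bytes should come from. The data plane then serves them and falls back to the next tier if a server is down.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Callable


@dataclass
class CacheNode:
    """Hypothetical data-plane server holding copies of some titles."""
    name: str
    tier: int                 # assumed: 0 = inside an ISP, 1 = exchange point, 2 = origin
    isp: str | None = None    # ISP the node is embedded in (None = reachable by everyone)
    titles: set[str] = field(default_factory=set)


def steer(title: str, client_isp: str, nodes: list[CacheNode]) -> list[str]:
    """Control-plane step: rank the nodes that can serve `title` for this client.

    Nodes embedded in a different ISP are skipped, and the rest are ordered
    by tier so the closest copy is tried first.
    """
    candidates = [
        n for n in nodes
        if title in n.titles and (n.isp is None or n.isp == client_isp)
    ]
    return [n.name for n in sorted(candidates, key=lambda n: n.tier)]


def fetch(title: str, client_isp: str, nodes: list[CacheNode],
          is_up: Callable[[str], bool]) -> str:
    """Data-plane step: try the ranked nodes in order and return the first healthy one."""
    for name in steer(title, client_isp, nodes):
        if is_up(name):
            return name
    raise RuntimeError(f"no reachable node has {title!r}")


if __name__ == "__main__":
    nodes = [
        CacheNode("isp-a-edge", tier=0, isp="isp-a", titles={"show-1"}),
        CacheNode("isp-b-edge", tier=0, isp="isp-b", titles={"show-2"}),
        CacheNode("ixp-1", tier=1, titles={"show-1", "show-2"}),
        CacheNode("origin", tier=2, titles={"show-1", "show-2"}),
    ]
    print(steer("show-1", "isp-a", nodes))  # ['isp-a-edge', 'ixp-1', 'origin']
    print(steer("show-2", "isp-a", nodes))  # ['ixp-1', 'origin']
    print(fetch("show-1", "isp-a", nodes, is_up=lambda n: n != "isp-a-edge"))  # ixp-1
```

Keeping the "where" decision separate from byte delivery is what lets each plane scale independently in this model. The data plane never has to consult the catalog or recommendation services.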

Results and learnings:

Global reach: Successfully streams to 301M+ subscribers across 190 countries

Reliability: Maintains 99.99% availability despite massive scale

Optimization: Reduced bandwidth needs by 20-40% through per-title encoding
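Per-title encoding replaces one fixed bitrate ladder with a ladder tuned to each title's complexity. Simple content such as flat animation can reach the same visual quality at a lower bitrate. The Python sketch below is a simplified stand-in, not Netflix's pipeline. Netflix's published approach is more involved and compares bitrate-quality curves across resolutions. The functions `per_title_ladder` and `savings` are hypothetical, and all numbers are invented.

```python
from typing import Dict, List, Tuple

# Hypothetical encode measurements: resolution -> [(bitrate_kbps, quality_0_to_100)]
Curves = Dict[str, List[Tuple[int, float]]]


def per_title_ladder(curves: Curves, target_quality: float) -> Dict[str, int]:
    """For each resolution, pick the cheapest measured bitrate reaching target_quality.

    If no measured point reaches the target, fall back to the highest bitrate tried.
    """
    ladder = {}
    for resolution, points in curves.items():
        good_enough = [kbps for kbps, quality in points if quality >= target_quality]
        ladder[resolution] = min(good_enough) if good_enough else max(k for k, _ in points)
    return ladder


def savings(fixed: Dict[str, int], tuned: Dict[str, int]) -> float:
    """Fraction of total ladder bitrate saved by the tuned ladder vs. the fixed one."""
    return 1 - sum(tuned[r] for r in fixed) / sum(fixed.values())


if __name__ == "__main__":
    fixed_ladder = {"720p": 3000, "1080p": 5000}  # same for every title (hypothetical)
    simple_title = {  # e.g. flat animation: quality saturates early (made-up numbers)
        "720p": [(1000, 88.0), (2000, 91.0), (3000, 94.0)],
        "1080p": [(2000, 86.0), (3500, 92.0), (5000, 95.0)],
    }
    tuned = per_title_ladder(simple_title, target_quality=90.0)
    print(tuned)                         # {'720p': 2000, '1080p': 3500}
    print(savings(fixed_ladder, tuned))  # 0.3125
```

The roughly 31% saving in this toy run comes from invented numbers. It is not evidence for the 20-40% figure above; it only shows where such savings come from.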

Netflix's architecture shows how thoughtful system design can solve incredibly complex technical challenges at massive scale. Their willingness to build custom solutions rather than accept off-the-shelf limitations demonstrates how architecture can be a competitive advantage.

Remember: Even Netflix's system isn't perfect. Just ask anyone who tried to watch the Tyson vs Paul fight! Sometimes the best architectures still buffer.

ARTICLE (docs-or-it-didnt-happen)

Why Documentation Fails Developers

GITHUB REPO (ai-for-noobs)

10 Lessons to Get Started Building AI Agents

GITHUB REPO (zoom-zoom-multiplayer)

Multiplayer at the speed of light

ARTICLE (js-ninja-tricks)

Hiding elements that require JavaScript without JavaScript

ARTICLE (css-wizardry)

Item Flow, Part 1: A new unified concept for layout

ARTICLE (db-drama)

Database Protocols Are Underwhelming

ARTICLE (ai-test-fightclub)

Comparing AI Unit Test Generators on TypeScript: Tusk vs. Cursor Agent vs. Claude Code

ARTICLE (postgres-go-brrr)

PostgreSQL BM25 Full-Text Search: Speed Up Performance with These Tips

ARTICLE (netflix-spy-mode)

How Netflix Accurately Attributes eBPF Flow Logs

Want to reach 180,000+ engineers?

Let’s work together! Whether it’s your product, service, or event, we’d love to help you connect with this awesome community.

Brief: Meta launches Llama 4 Scout (17B active parameters, 10M-token context) and Llama 4 Maverick (which Meta says beats GPT-4o and Gemini 2.0 Flash on many benchmarks), both open-weight and multimodal, while teasing Llama 4 Behemoth, a still-training model with 288B active parameters.

Brief: Midjourney's V7 Alpha turns on model personalization by default and adds Draft Mode, which renders about 10x faster for rapid iteration, alongside Turbo/Relax modes, with higher-quality textures and better coherence in AI-generated art.

Brief: Google’s new Ironwood TPU shifts focus from training to AI inference, tackling soaring costs with double performance per watt and 6x more memory than its predecessor.

Brief: Samsung’s Ballie rolling companion will debut Gemini AI integration in May, promising smarter home automation and voice-controlled tasks in its latest revival.

Brief: OpenAI upgrades ChatGPT’s memory to reference past conversations by default, raising privacy concerns but enabling more personalized responses for Plus and Pro users.

This week’s coding challenge:

This week’s tip:

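Use Docker's --mount flag instead of -v for explicit, more maintainable volume declarations. The --mount syntax is more verbose, but it is self-documenting. Every option, such as readonly or bind-propagation, is spelled out as a named field rather than a positional, colon-separated suffix. Some settings, such as volume driver options, are only available through --mount.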

Wen?

Microservice deployments: Clearly document volume requirements in deployment scripts with explicit mount options.

Shared development environments: Prevent accidental writes with readonly flags and ensure consistent mount behavior across teams.

Production systems: Better control over volume properties and easier debugging of mount-related issues.
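The Python helper below makes the difference concrete. It assembles an explicit --mount argument for a subprocess-style docker run command. The helper name mount_args and the example paths are hypothetical. The fields it emits (type, source, target, readonly, bind-propagation) follow Docker's documented --mount syntax.

```python
import shlex
from typing import List, Optional

VALID_TYPES = {"bind", "volume", "tmpfs"}


def mount_args(target: str, source: Optional[str] = None, mount_type: str = "bind",
               readonly: bool = False, bind_propagation: Optional[str] = None) -> List[str]:
    """Build ["--mount", "type=...,source=...,target=..."] for a docker run argv list."""
    if mount_type not in VALID_TYPES:
        raise ValueError(f"unknown mount type {mount_type!r}")
    if mount_type == "bind" and source is None:
        raise ValueError("bind mounts need a host source path")
    if mount_type == "tmpfs" and source is not None:
        raise ValueError("tmpfs mounts take no source")
    if bind_propagation is not None and mount_type != "bind":
        raise ValueError("bind-propagation only applies to bind mounts")

    fields = [f"type={mount_type}"]
    if source is not None:  # omitted for tmpfs and for anonymous volumes
        fields.append(f"source={source}")
    fields.append(f"target={target}")
    if readonly:
        fields.append("readonly")
    if bind_propagation is not None:
        fields.append(f"bind-propagation={bind_propagation}")
    return ["--mount", ",".join(fields)]


if __name__ == "__main__":
    cmd = ["docker", "run", "--rm",
           *mount_args("/app/config", source="/srv/config", readonly=True),
           "nginx:alpine"]
    print(shlex.join(cmd))
    # docker run --rm --mount type=bind,source=/srv/config,target=/app/config,readonly nginx:alpine
```

The rough -v equivalent is -v /srv/config:/app/config:ro, which packs the same information into positional fields. There is also a behavioral difference for bind mounts. With -v, Docker creates a missing host directory. With --mount, Docker fails with an error instead, which surfaces path typos early.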

If you don't read the newspaper, you're uninformed. If you read the newspaper, you're misinformed.

Mark Twain

That’s it for today! ☀️

Enjoyed this issue? Send it to your friends here to sign up, or share it on Twitter!

If you want to submit a section to the newsletter or tell us what you think about today’s issue, reply to this email or DM me on Twitter! 🐦

Thanks for spending part of your Monday morning with Hungry Minds.

See you in a week — Alex.

Icons by Icons8.

*I may earn a commission if you get a subscription through the links marked with “aff.” (at no extra cost to you).