Today’s issue of Hungry Minds is brought to you by:

Happy Monday! ☀️

Welcome to the 493 new hungry minds who have joined us since last Monday!

If you aren't subscribed yet, join smart, curious, and hungry folks by subscribing here.

📚 Software Engineering Articles

Master cloud costs with this resource optimization guide

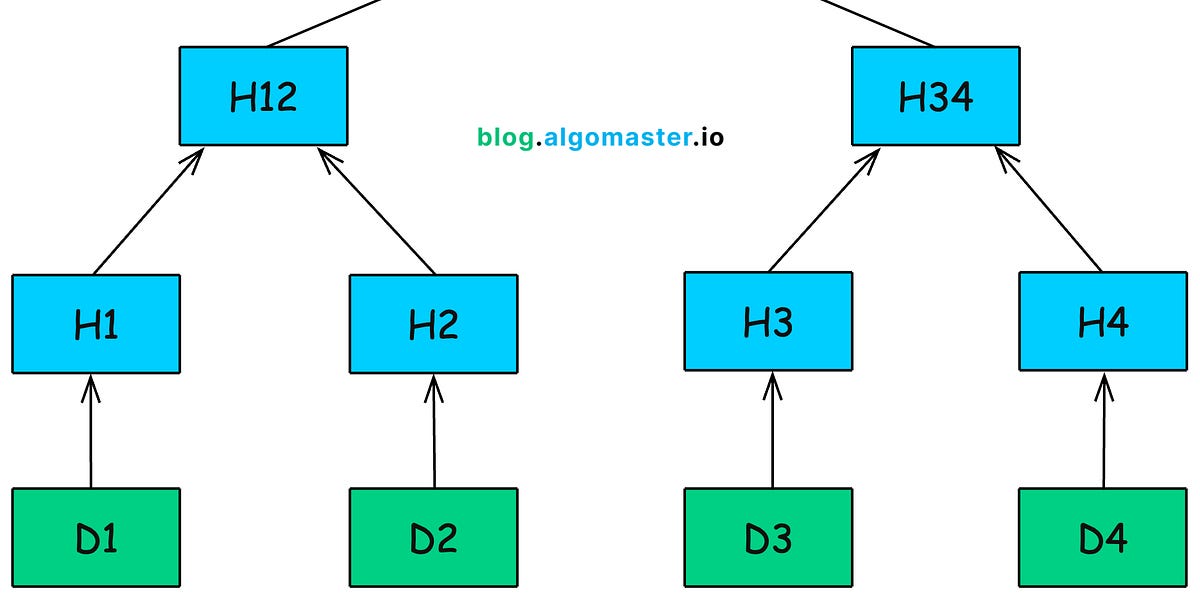

Explore 15 data structures powering modern distributed databases

SQLite for servers performs surprisingly well at scale

Learn to conduct effective performance reviews as an engineering leader

Amazon Principal Engineer shares insights on tech career growth

🗞️ Tech and AI Trends

OpenAI launches GPT-4.5 'Orion', their most powerful model yet

Perplexity announces AI-powered browser Comet

Claude AI plays Pokémon live on Twitch

👨🏻💻 Coding Tip

What are Rust lifetimes and how do they work?

Time-to-digest: 5 minutes

Big thanks to our partners for keeping this newsletter free.

If you have a second, clicking the ad below helps us a ton—and who knows, you might find something you love. 💚

Industry-leading voice models featuring 40ms latency and best-in-class voice quality

Instant cloning with 3 seconds of audio, voice changer allowing for fine-grained control, and audio infilling with templated scripts to generate personalized content, at scale

Launch voice agents with only 50 lines of code

When dealing with time series data, you often end up with lots of repeated information. A public transport API in Paris was storing 10GB of JSON data about service disruptions, with new entries added every 2 minutes. Could we make this more efficient?

The challenge:

Compressing time series data while keeping it queryable is hard, as you need to balance size reduction with access speed and data structure complexity.

Implementation highlights:

Used the interning pattern to deduplicate repeated data by storing unique values in lookup tables

Applied specialized data structures for UUIDs and timestamps instead of strings

Optimized serialization with delta encoding and run-length encoding, with compression (gzip/brotli/xz) as the final optimization layer
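To make the interning idea concrete, here is a minimal sketch of a lookup table that stores each distinct value once and hands back a small integer id. The `Interner` type and its method names are hypothetical, made up for illustration; the original article's implementation may look quite different.

```rust
use std::collections::HashMap;
use std::hash::Hash;

/// Hypothetical generic interner: stores each distinct value once and
/// hands out a small integer id to use in its place.
#[derive(Debug)]
pub struct Interner<T> {
    ids: HashMap<T, u32>, // value -> id, consulted while inserting
    values: Vec<T>,       // id -> value, the lookup table itself
}

impl<T: Clone + Eq + Hash> Interner<T> {
    pub fn new() -> Self {
        Interner {
            ids: HashMap::new(),
            values: Vec::new(),
        }
    }

    /// Returns the existing id for `value`, or assigns the next free one.
    pub fn intern(&mut self, value: T) -> u32 {
        if let Some(&id) = self.ids.get(&value) {
            return id;
        }
        let id = self.values.len() as u32;
        self.ids.insert(value.clone(), id);
        self.values.push(value);
        id
    }

    /// Resolves an id back to the stored value, if that id was handed out.
    pub fn lookup(&self, id: u32) -> Option<&T> {
        self.values.get(id as usize)
    }

    /// Number of distinct values stored so far.
    pub fn len(&self) -> usize {
        self.values.len()
    }
}
```

Because the sketch is generic over any `Clone + Eq + Hash` type, the same table works for strings, tuples, or whole records: interning the same value twice returns the same id, and every repeat costs only a `u32` instead of a full copy.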

Results and learnings:

Achieved 2000x size reduction (from 1.1GB to 530KB) while keeping data queryable

Interning works best when applied broadly across all data types, not just strings

The resulting system is effectively a simple append-only database perfect for time series
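The delta and run-length encoding steps from the implementation highlights pair nicely with data that arrives at a fixed interval. Below is a rough sketch, assuming timestamps are stored as `i64` seconds; the function names are hypothetical and this is not the article's actual code.

```rust
/// Delta encoding: store each value as the difference from its
/// predecessor (the first value is stored relative to zero).
pub fn delta_encode(values: &[i64]) -> Vec<i64> {
    let mut out = Vec::with_capacity(values.len());
    let mut prev = 0i64;
    for &v in values {
        out.push(v - prev);
        prev = v;
    }
    out
}

/// Inverse of `delta_encode`: a running sum restores the original values.
pub fn delta_decode(deltas: &[i64]) -> Vec<i64> {
    let mut out = Vec::with_capacity(deltas.len());
    let mut acc = 0i64;
    for &d in deltas {
        acc += d;
        out.push(acc);
    }
    out
}

/// Run-length encoding: collapse runs of equal values into (value, count).
pub fn rle_encode(values: &[i64]) -> Vec<(i64, u32)> {
    let mut runs: Vec<(i64, u32)> = Vec::new();
    for &v in values {
        if let Some(last) = runs.last_mut() {
            if last.0 == v {
                last.1 += 1;
                continue;
            }
        }
        runs.push((v, 1));
    }
    runs
}

/// Inverse of `rle_encode`: expand each (value, count) pair.
pub fn rle_decode(runs: &[(i64, u32)]) -> Vec<i64> {
    let mut out = Vec::new();
    for &(v, count) in runs {
        for _ in 0..count {
            out.push(v);
        }
    }
    out
}
```

With entries arriving every 2 minutes, the deltas are mostly the constant 120, so the run-length step collapses them into a handful of pairs. A general-purpose compressor such as gzip can then work on a much smaller input.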

This was a pretty nice one imo. At least re-building the database with Rust got the data less disrupted than the actual service 🤣

ESSENTIAL (AI needs a makeover)

Rethinking LLM Inference: Why Developer AI Needs A Different Approach

ESSENTIAL (money talks, tech walks)

Trimodal Nature Of Tech Compensation In The US, UK And India

GITHUB REPO (compose yourself)

Composio equips your AI agents & LLMs with 100+ high-quality integrations via function calling

ARTICLE (C is for JSON parsing)

Abusing C to implement JSON Parsing with Struct Methods

ARTICLE (cross-site shenanigans)

Cross-Site Requests

ARTICLE (typo-taming AI)

Using AI in the Browser for Typo Rewriting

ARTICLE (CSS is the real MVP)

Knowing CSS is mastery to Frontend Development

ARTICLE (code reviews: the art of nitpicking)

How to Do Thoughtful Code Reviews

ARTICLE (API building blocks)

Building APIs with Next.js

ARTICLE (state transitions: the React dance)

{transitions} = f(state)

Want to reach 150,000+ engineers?

Let’s work together! Whether it’s your product, service, or event, we’d love to help you connect with this awesome community.

Brief: OpenAI's Deep Research System Card details rigorous safety testing and risk evaluations for its new model, emphasizing privacy protections and mitigations against potential threats.

Brief: Perplexity announces its upcoming web browser Comet, aiming to reinvent browsing while inviting users to sign up for updates, amidst a competitive landscape.

Brief: DeepSeek aims to accelerate the release of its next-gen R2 AI model, promising enhanced coding skills and multilingual reasoning, following the success of its R1 model.

Brief: Anthropic's Claude 3.7 Sonnet is now live on Twitch, attempting to play Pokémon Red, showcasing its AI reasoning skills while amusingly struggling with basic game mechanics.

This week’s coding challenge:

This week’s tip:

In Rust, lifetimes are a powerful feature that ensures references are valid for as long as they are used. They are particularly important when working with borrowed data to prevent dangling references. By explicitly annotating lifetimes, you can help the compiler understand the relationships between references and ensure memory safety.

When?

Borrowed Data: Use lifetimes when working with borrowed data (references) to ensure that the data remains valid for the duration of its use, preventing dangling references.

Structs with References: Essential when defining structs that hold references, as you need to specify how long the referenced data should live to avoid invalid memory access.

Complex Function Signatures: Useful in functions that take multiple references and return a reference, ensuring the returned reference is valid for the appropriate scope.
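The three cases above can be sketched in a few lines. The names `Excerpt`, `longest`, and `first_sentence` are hypothetical and chosen only for illustration.

```rust
/// A struct that holds a reference must name the lifetime of the data it
/// borrows: an `Excerpt<'a>` cannot outlive the `&'a str` inside it.
#[derive(Debug)]
pub struct Excerpt<'a> {
    pub text: &'a str,
}

/// Both inputs and the output share the lifetime `'a`, so the returned
/// reference is only usable while both arguments are still valid.
pub fn longest<'a>(x: &'a str, y: &'a str) -> &'a str {
    if x.len() >= y.len() {
        x
    } else {
        y
    }
}

/// The returned `Excerpt` borrows from `text`. The lifetime is spelled out
/// here for clarity, although the compiler could infer it in this case.
pub fn first_sentence<'a>(text: &'a str) -> Excerpt<'a> {
    // Cut just after the first '.', or keep the whole text if there is none.
    let end = text.find('.').map(|i| i + 1).unwrap_or(text.len());
    Excerpt { text: &text[..end] }
}
```

Note that annotations do not make any value live longer. They only describe how the borrows relate, so the compiler can reject code where, say, the result of `longest` is used after one of its arguments has been dropped.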

"Do not ignore your gift. Your gift is the thing you do the absolute best with the least amount of effort."

Steve Harvey

That’s it for today! ☀️

Enjoyed this issue? Send it to your friends so they can sign up here, or share it on Twitter!

If you want to submit a section to the newsletter or tell us what you think about today’s issue, reply to this email or DM me on Twitter! 🐦

Thanks for spending part of your Monday morning with Hungry Minds.

See you in a week — Alex.

Icons by Icons8.

*I may earn a commission if you get a subscription through the links marked with “aff.” (at no extra cost to you).